Create and Run an HPO

This topic explains how to create and run an HPO using the Myst Platform.

Creating an HPO

In addition to supporting the deployment of Networks of Time Series (NoTS) and backtests, the Myst Platform supports model hyperparameter optimization (HPO). An HPO evaluates many different combinations of model hyperparameters over a historical period of time. You can analyze the results of an HPO in our client library to help you choose the optimal hyperparameters for your models.

To begin creating an HPO, first log in to the Myst Platform and create a new Project or select an existing Project. Then make sure you have created a NoTS containing the Model you want to optimize with HPO.

Guardrails

HPOs time out after six (6) hours. Any trials that complete within those six hours are returned, and the platform surfaces the best of those completed trials. Trials that are still running when the timeout is reached will not complete.
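The selection rule above can be sketched in plain Python. This is a minimal illustration, not the platform's implementation: the `TrialRecord` type, its fields, and the assumption that a lower loss is better are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

TIMEOUT_HOURS = 6.0  # documented HPO timeout


@dataclass
class TrialRecord:
    """Hypothetical trial summary (not a Myst client library type)."""

    trial_id: int
    finished_at_hours: Optional[float]  # hours after the HPO started; None if unfinished
    loss: Optional[float]  # assumed lower-is-better; None if unfinished


def best_completed_trial(
    trials: list[TrialRecord], timeout_hours: float = TIMEOUT_HOURS
) -> Optional[TrialRecord]:
    """Return the lowest-loss trial that finished within the timeout, if any."""
    completed = [
        t
        for t in trials
        if t.finished_at_hours is not None
        and t.loss is not None
        and t.finished_at_hours <= timeout_hours
    ]
    return min(completed, key=lambda t: t.loss, default=None)


trials = [
    TrialRecord(trial_id=0, finished_at_hours=1.5, loss=0.42),
    TrialRecord(trial_id=1, finished_at_hours=3.0, loss=0.37),
    TrialRecord(trial_id=2, finished_at_hours=None, loss=None),  # still running at the timeout
]
print(best_completed_trial(trials).trial_id)  # 1
```

Trials that never finish simply drop out of the comparison, so the "best" result is only the best among what completed in time.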

To ensure that HPOs can complete in a reasonable amount of time, we have introduced the following guardrails for creating new HPOs:

- Maximum number of concurrent HPO runs (i.e. jobs) per project: 30

- Maximum number of concurrent HPO runs (i.e. jobs) per user (across all projects): 5

- Maximum number of trials per HPO: 150

- Maximum number of concurrent trials per HPO: 5

Exceeding these limits will raise an error in the client library and in the Web Application. Deleted HPOs will not count against the limits.
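If you script HPO creation, it can help to check a planned configuration against the published limits before submitting it. The helper below is a hypothetical client-side sketch that simply mirrors the numbers listed above; the platform remains the authority and raises its own error. The function name and its arguments are illustrative assumptions.

```python
# Published guardrails, mirrored for illustration; the platform enforces the real limits.
MAX_CONCURRENT_RUNS_PER_PROJECT = 30
MAX_CONCURRENT_RUNS_PER_USER = 5
MAX_TRIALS_PER_HPO = 150
MAX_CONCURRENT_TRIALS_PER_HPO = 5


def hpo_limit_violations(
    num_trials: int,
    max_concurrent_trials: int,
    running_in_project: int = 0,
    running_for_user: int = 0,
) -> list[str]:
    """Hypothetical pre-check: list the guardrails a new HPO run would exceed.

    running_in_project / running_for_user should exclude deleted HPOs,
    which do not count against the limits.
    """
    violations = []
    if num_trials > MAX_TRIALS_PER_HPO:
        violations.append(f"num_trials={num_trials} exceeds {MAX_TRIALS_PER_HPO}")
    if max_concurrent_trials > MAX_CONCURRENT_TRIALS_PER_HPO:
        violations.append(
            f"max_concurrent_trials={max_concurrent_trials} exceeds {MAX_CONCURRENT_TRIALS_PER_HPO}"
        )
    # Starting one more run must keep the running totals within the limits.
    if running_in_project + 1 > MAX_CONCURRENT_RUNS_PER_PROJECT:
        violations.append("project already has the maximum number of concurrent HPO runs")
    if running_for_user + 1 > MAX_CONCURRENT_RUNS_PER_USER:
        violations.append("user already has the maximum number of concurrent HPO runs")
    return violations


print(hpo_limit_violations(num_trials=30, max_concurrent_trials=3))  # []
print(hpo_limit_violations(num_trials=200, max_concurrent_trials=8))
```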

Note: Many other factors also affect HPO runtime, including model parameters, feature count, HPO configuration, and the characteristics of your dataset. Here are some tips for keeping runtime within the six-hour window:

- Reduce the number of fit folds

- Reduce the number of trials

- Reduce the size of your search space

- Use a smaller test period

- Use only as much training history as helps accuracy

- Use a coarser sample period (The fifteen-minute market may not need to be forecasted at 15-minute granularity; 1-hour granularity can perform comparably in many cases.)

- Use subsampling parameters for GBDTs (These can improve accuracy, as well!)

- Lower num_boost_round for GBDTs in early development

- Lower max_epochs for neural networks in early development
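To see why these tips help, here is a back-of-the-envelope estimate under a simplifying assumption: every trial takes the same (hypothetical) number of minutes, and trials run in waves of `max_concurrent_trials`. Fewer trials mean fewer waves; fewer fit folds, less history, a smaller test period, and fewer boosting rounds or epochs shorten each trial; a coarser sample period shrinks the data each trial processes. The helper names and the 45-minute trial duration are illustrative, not measured values.

```python
import math

TIMEOUT_MINUTES = 6 * 60  # documented six-hour HPO timeout


def estimated_hpo_minutes(num_trials: int, max_concurrent_trials: int, minutes_per_trial: float) -> float:
    """Rough wall-clock estimate, assuming equal-length trials run in full waves."""
    waves = math.ceil(num_trials / max_concurrent_trials)
    return waves * minutes_per_trial


def samples_per_year(sample_period_minutes: int) -> int:
    """Number of samples in a 365-day year at the given sample period."""
    return 365 * 24 * 60 // sample_period_minutes


# Hypothetical 45-minute trials: 30 trials at 3 concurrent -> 10 waves -> 450 minutes.
print(estimated_hpo_minutes(30, 3, 45) <= TIMEOUT_MINUTES)  # False
# Cutting to 20 trials -> 7 waves -> 315 minutes.
print(estimated_hpo_minutes(20, 3, 45) <= TIMEOUT_MINUTES)  # True
# Moving from 15-minute to 1-hour samples cuts the rows per year by 4x.
print(samples_per_year(15), samples_per_year(60))  # 35040 8760
```

Real schedulers start a new trial as soon as a slot frees up and trial durations vary, so treat this only as an order-of-magnitude check.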

HPOs, deployment, and immutability

When you run an HPO in a Project that has not been deployed yet, the nodes in your NoTS become immutable. This is achieved by creating an inactive Deployment in the background; because the Deployment is inactive, it does not activate any of your Policies.

As a workflow, you can do the following, in order:

- Create a Project with a Model with which you would like to run an HPO

- Run one or more HPOs, which will make your NoTS immutable

- Copy the Project, update hyperparameter values, and add Policies

- Deploy the new Project

Web Application

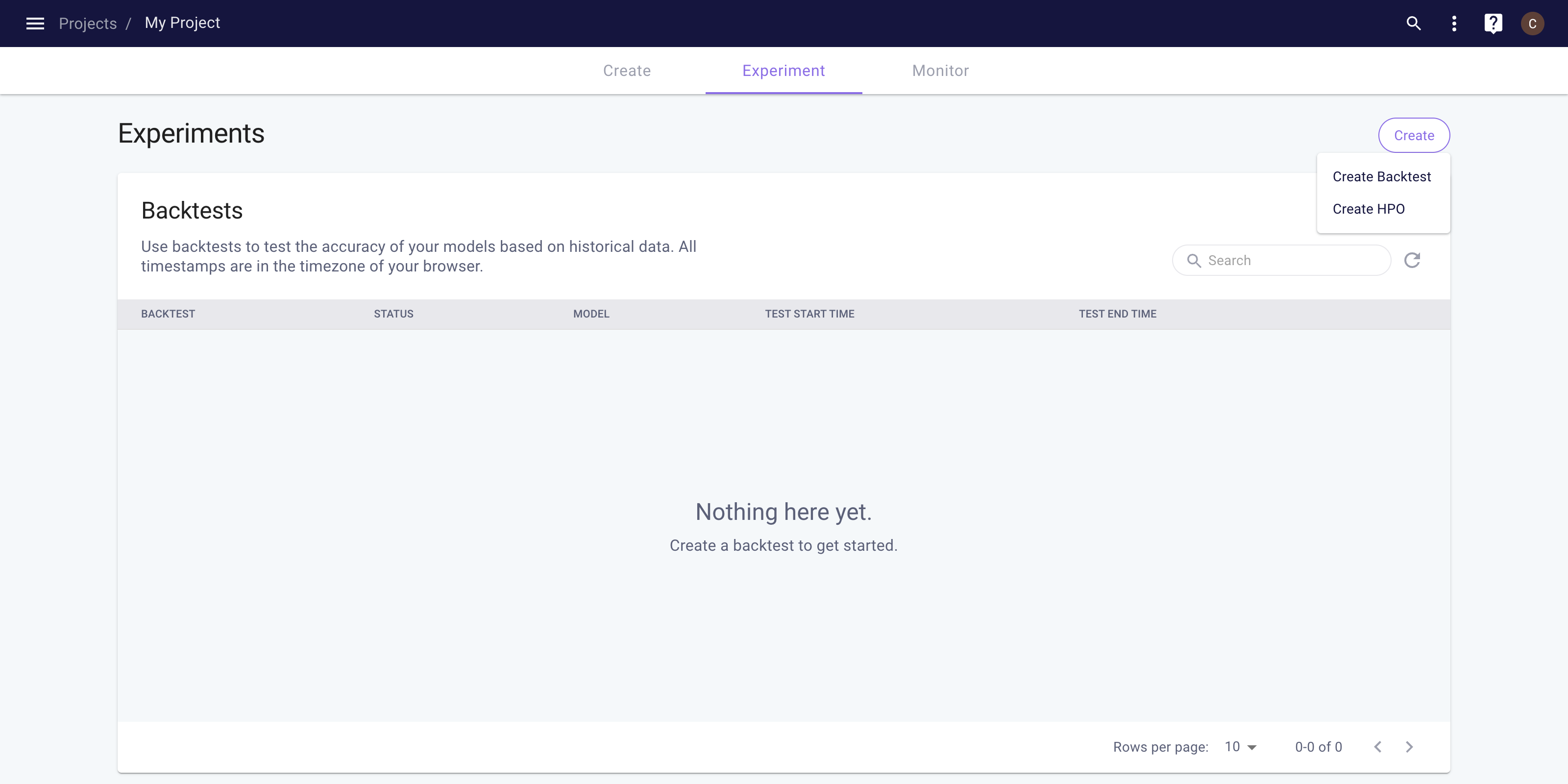

From the Project Experiment page, you can view the existing HPOs in your Project.

Project Experiment space

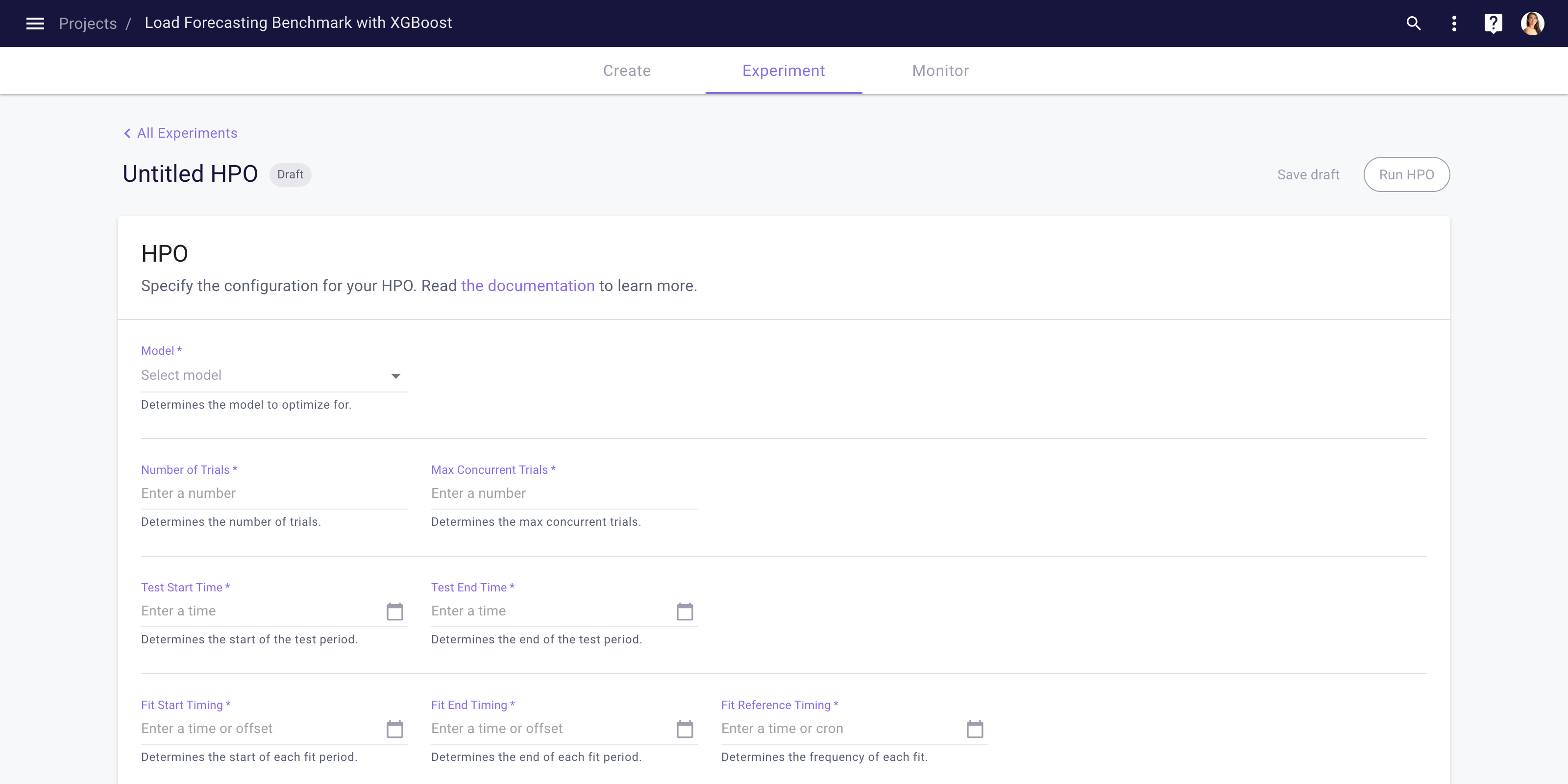

To create a new HPO, click the Create button on the top right of the Experiment tab, then click the Create HPO button. This opens the HPO detail page, where you can configure your HPO. See our HPO page for details on parameters and timing. The parameters are very similar to those of a backtest, because an HPO is essentially a series of backtests run with different hyperparameter values.
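Conceptually, an HPO runs one backtest per set of sampled hyperparameter values and keeps the best result. The sketch below shows that loop with plain random search and a toy stand-in for the backtest; both `toy_backtest` and the search space are hypothetical, and the client library example in this topic uses `myst.hpo.Hyperopt` rather than random search.

```python
import random


def toy_backtest(params: dict) -> float:
    """Hypothetical stand-in for a backtest: returns a loss where lower is better."""
    # Toy objective with its minimum at max_depth=6, learning_rate=0.05.
    return (params["max_depth"] - 6) ** 2 + 100 * (params["learning_rate"] - 0.05) ** 2


def sample_params(rng: random.Random) -> dict:
    """Draw one candidate from a small hypothetical search space."""
    return {
        "max_depth": rng.randint(1, 12),
        "learning_rate": rng.uniform(0.005, 0.2),
    }


def random_search(num_trials: int, seed: int = 0) -> tuple[dict, float]:
    """Run one toy backtest per trial and keep the lowest-loss parameters."""
    rng = random.Random(seed)
    best_params, best_loss = {}, float("inf")
    for _ in range(num_trials):
        params = sample_params(rng)
        loss = toy_backtest(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(num_trials=30)
print(best_params, round(best_loss, 4))
```

Smarter search algorithms differ mainly in how `sample_params` picks the next candidate (using the results of earlier trials), not in the overall backtest-per-trial loop.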

HPO details page

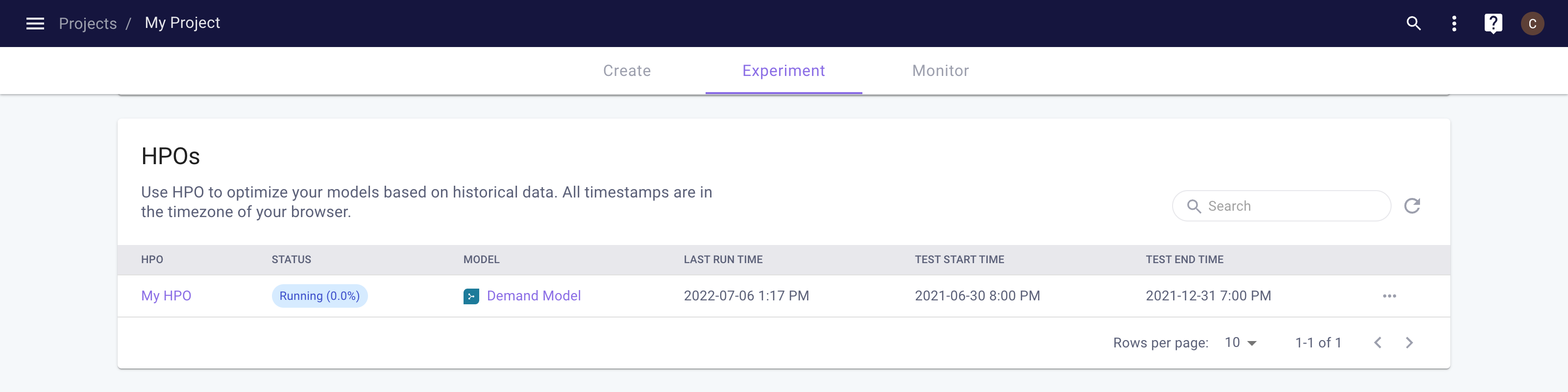

Next, click the Save Draft button to save the HPO as a draft. Once it is saved, you can run the HPO by clicking the Run HPO button, which kicks off the HPO. You can track its progress by navigating back to the HPO table (beneath the backtests table on the Experiments page). The percentage complete is the number of completed trials out of the total number of trials specified in the HPO parameters.
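The progress figure is a simple ratio. As a small illustration (the helper name is hypothetical, and the Web Application may round the displayed value):

```python
def hpo_progress_percent(completed_trials: int, total_trials: int) -> float:
    """Completed trials as a percentage of the total number of trials in the HPO."""
    if total_trials <= 0:
        raise ValueError("total_trials must be positive")
    return 100.0 * completed_trials / total_trials


# With num_trials=30, as in the client library example in this topic:
print(hpo_progress_percent(12, 30))  # 40.0
```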

HPO Progress

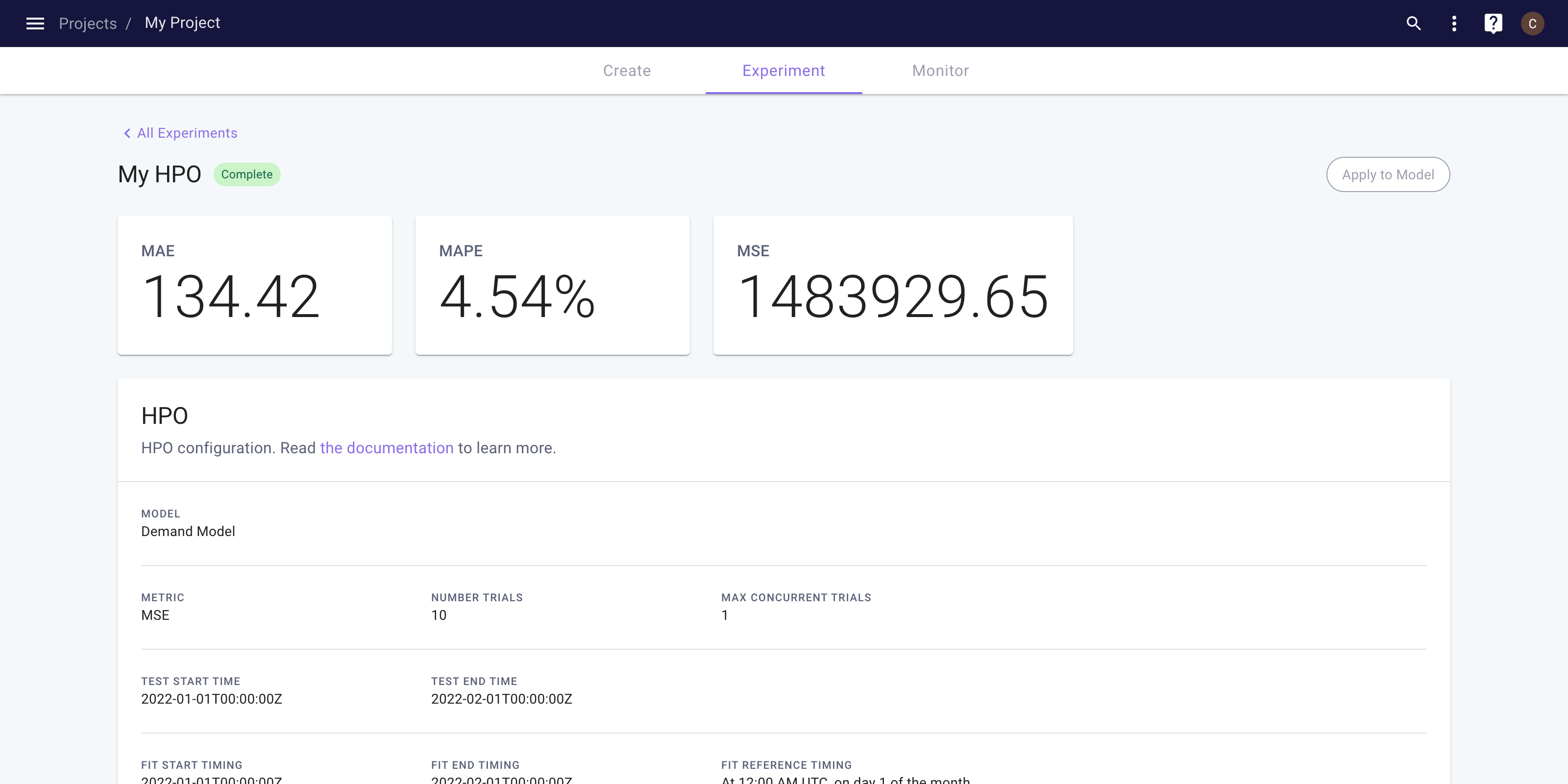

Once the HPO has completed, you can view the results by clicking on it in the HPO table. This takes you to the HPO detail page, which shows three accuracy metrics. To analyze the HPO result in more detail, see Analyze HPO Results below.

HPO Results

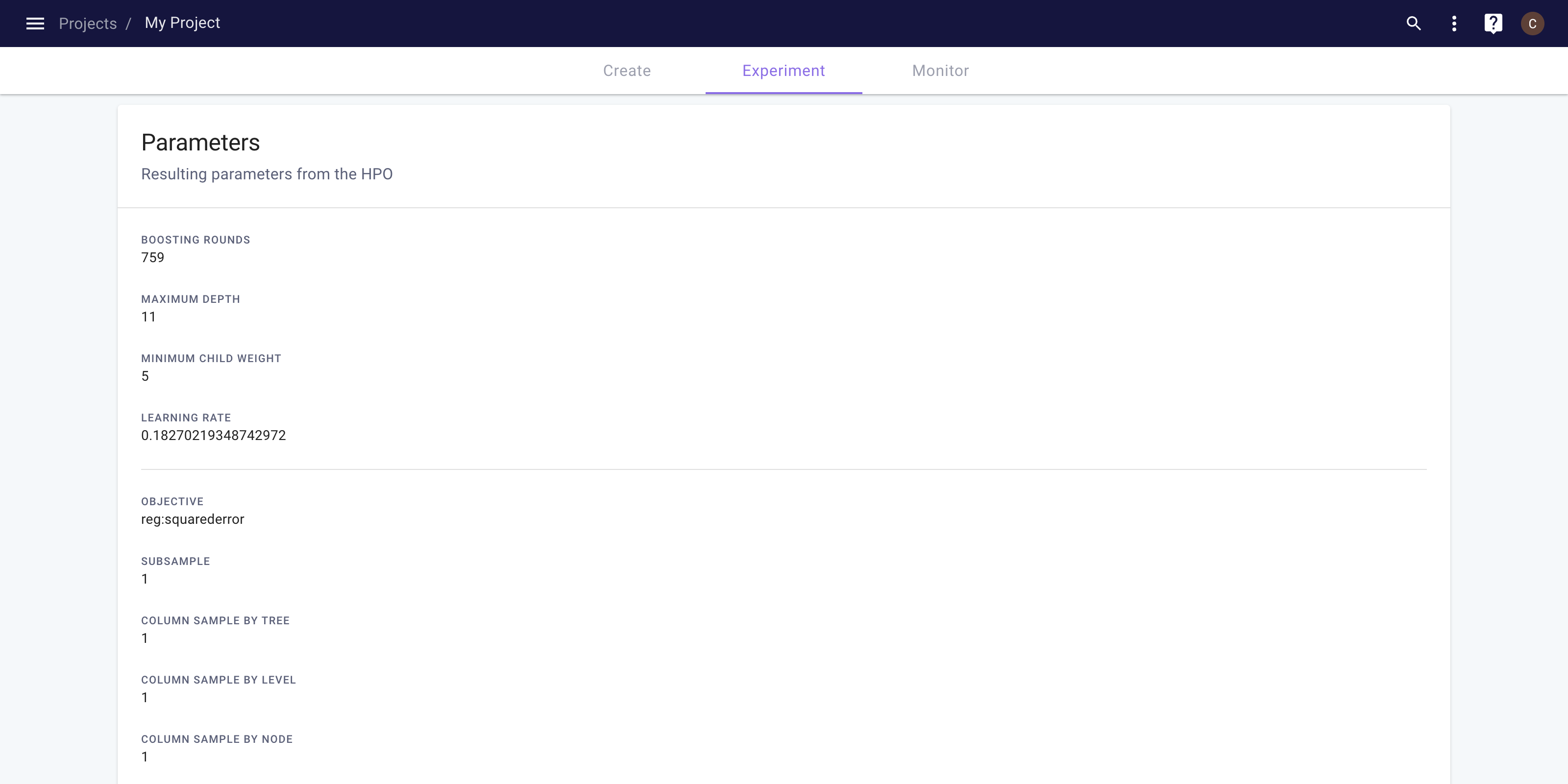

You can scroll down the page to see resulting hyperparameter values. The first section lists the parameters optimized in the HPO. The second section lists the other, non-optimized parameters.
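If you want to reproduce that split on your own parameter dictionary, the rule is: a parameter counts as optimized if it appears in the HPO search space, and as non-optimized otherwise. The sketch below assumes the full parameter set is available as a plain dict; the values and the `subsample`/`objective` entries are made-up placeholders, not HPO output.

```python
def split_parameters(all_params: dict, search_space_keys: set) -> tuple[dict, dict]:
    """Split parameters into (optimized, non-optimized) by search-space membership."""
    optimized = {k: v for k, v in all_params.items() if k in search_space_keys}
    non_optimized = {k: v for k, v in all_params.items() if k not in search_space_keys}
    return optimized, non_optimized


# Keys from the search space in the client library example in this topic.
search_space_keys = {"num_boost_round", "max_depth", "learning_rate", "min_child_weight"}

# Made-up placeholder values, not real HPO output.
all_params = {
    "num_boost_round": 400,
    "max_depth": 7,
    "learning_rate": 0.03,
    "min_child_weight": 15,
    "subsample": 0.8,
    "objective": "regression",
}

optimized, non_optimized = split_parameters(all_params, search_space_keys)
print(optimized)
print(non_optimized)  # {'subsample': 0.8, 'objective': 'regression'}
```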

HPO Parameter Results

Client Library

To create and run an HPO in the client library, you can use the following code. Users familiar with backtesting will see many similarities.

```python
import myst

myst.authenticate()

# Use an existing project.
project = myst.Project.get(uuid="<uuid>")

# Use an existing model within the project.
model = myst.Model.get(project=project, uuid="<uuid>")

# Create an HPO.
hpo = myst.HPO.create(
    project=project,
    title="My HPO",
    model=model,
    # Historical period over which each trial is backtested.
    test_start_time=myst.Time("2021-07-01T00:00:00Z"),
    test_end_time=myst.Time("2022-01-01T00:00:00Z"),
    # Fit window relative to each fit reference time; refit weekly (cron: Mondays at 00:00).
    fit_start_timing=myst.TimeDelta("-P1M"),
    fit_end_timing=myst.TimeDelta("-PT24H"),
    fit_reference_timing=myst.CronTiming(cron_expression="0 0 * * 1"),
    # Predict 1 to 24 hours ahead of each daily reference time (cron: every day at 00:00).
    predict_start_timing=myst.TimeDelta("PT1H"),
    predict_end_timing=myst.TimeDelta("PT24H"),
    predict_reference_timing=myst.CronTiming(cron_expression="0 0 * * *"),
    # Distributions to sample each hyperparameter from.
    search_space={
        "num_boost_round": myst.hpo.LogUniform(lower=100, upper=1000),
        "max_depth": myst.hpo.QUniform(lower=1, upper=12, q=1),
        "learning_rate": myst.hpo.LogUniform(lower=0.005, upper=0.2),
        "min_child_weight": myst.hpo.QUniform(lower=0, upper=100, q=5),
    },
    # 30 trials with at most 3 running at once (within the 150-trial and 5-concurrent-trial limits).
    search_algorithm=myst.hpo.Hyperopt(num_trials=30, max_concurrent_trials=3),
)

# Run the HPO.
hpo.run()
```
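The search space entries describe how each trial draws its hyperparameter values. The sketch below samples the two distribution types in plain Python, assuming `LogUniform(lower, upper)` takes its bounds on the original scale (unlike Hyperopt's `loguniform`, which takes log-scale bounds) and `QUniform(lower, upper, q)` rounds a uniform draw to the nearest multiple of `q`, as Hyperopt's `quniform` does. These semantics are assumptions about the Myst classes, not documented behavior.

```python
import math
import random


def sample_log_uniform(rng: random.Random, lower: float, upper: float) -> float:
    """Draw a value whose logarithm is uniform on [log(lower), log(upper)]."""
    return math.exp(rng.uniform(math.log(lower), math.log(upper)))


def sample_q_uniform(rng: random.Random, lower: float, upper: float, q: float) -> float:
    """Draw uniformly from [lower, upper], then round to the nearest multiple of q."""
    return round(rng.uniform(lower, upper) / q) * q


rng = random.Random(42)
learning_rates = [sample_log_uniform(rng, 0.005, 0.2) for _ in range(10_000)]
min_child_weights = [sample_q_uniform(rng, 0, 100, 5) for _ in range(10_000)]

# About half the log-uniform draws fall below the geometric midpoint sqrt(0.005 * 0.2) ~= 0.0316,
# whereas a plain uniform draw on [0.005, 0.2] would land there only ~14% of the time.
midpoint = math.sqrt(0.005 * 0.2)
share_below = sum(lr < midpoint for lr in learning_rates) / len(learning_rates)
print(round(share_below, 2))
print(sorted(set(min_child_weights)))  # multiples of 5 between 0 and 100
```

Log-uniform sampling spreads trials evenly across orders of magnitude, which suits parameters such as `learning_rate` whose useful values span a wide multiplicative range.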

Analyze HPO Results

Once an HPO has completed, you can analyze the result of the HPO using the Myst Platform client library.

Client Library

To extract the result of an HPO in the client library, you can use the following code.

```python
import myst

myst.authenticate()

# Use an existing project.
project = myst.Project.get(uuid="<uuid>")

# Use an existing HPO within the project.
hpo = myst.HPO.get(project=project, uuid="<uuid>")

# Wait until the HPO is complete.
hpo.wait_until_completed()

# Get the result of the completed HPO.
hpo_result = hpo.get_result()
```

Once you have extracted your HPO result, you can use the following code to inspect the best trial's parameters and pre-computed metrics.

```python
# Extract the best trial from the HPO result.
best_trial = hpo_result.best_trial

# Access the optimized parameters for the best trial.
best_trial.parameters

# Access the pre-computed metrics for the best trial.
best_trial.metrics
```
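To carry the best values into the copied Project described in the workflow above, you can merge them into your Model's existing hyperparameters. This sketch assumes `best_trial.parameters` behaves like a dict of parameter names to values and that integer-valued parameters such as `max_depth` may come back as floats from the search space; the helper and the example values are hypothetical.

```python
# Parameters assumed to be integer-valued for GBDTs (assumption; adjust for your model).
INTEGER_PARAMS = {"num_boost_round", "max_depth"}


def apply_best_parameters(base_params: dict, best_parameters: dict) -> dict:
    """Hypothetical helper: overlay HPO results on existing hyperparameters."""
    updated = {**base_params, **best_parameters}
    for name in INTEGER_PARAMS:
        if name in updated:
            # Sampled values may arrive as floats such as 7.0 or 412.7.
            updated[name] = int(round(updated[name]))
    return updated


# Placeholder values for illustration only.
# In practice (assuming it is dict-like): best_parameters = dict(best_trial.parameters)
base_params = {"num_boost_round": 500, "max_depth": 6, "learning_rate": 0.1, "subsample": 0.8}
best_parameters = {"num_boost_round": 412.7, "max_depth": 7.0, "learning_rate": 0.031}

print(apply_best_parameters(base_params, best_parameters))
# {'num_boost_round': 413, 'max_depth': 7, 'learning_rate': 0.031, 'subsample': 0.8}
```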
